Hello Edward, as discussed, here is an overview of our process along with the data we have cleaned so far.

Raw Data Download (18 MB): Data for FotM

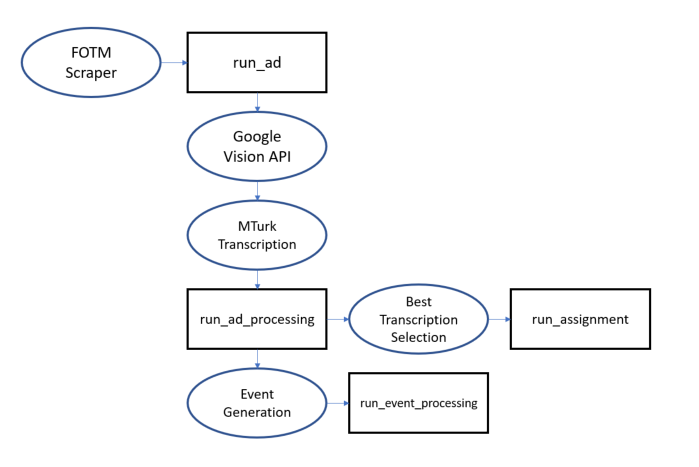

- Our scraper has pulled your ads, events, runaways, newspapers and other tables and descriptors from your website and stored them on our SQL server. I will also develop an additional process to extract the new ads you are going to send us from their PDFs.

- Ads are first sent through the Google Vision API (https://cloud.google.com/vision) to get a first draft transcript.

- The first draft transcript is sent to Amazon Mechanical Turk (https://www.mturk.com/), where workers revise it into two distinct final draft candidates.

- The Best Transcription Selector is a program that analyses each final draft candidate and picks the best one as the final draft.

- Once an ad_id has a final draft attached, it is run through the Event Generator, which uses cosine similarity to match the ad to an existing group of ads or creates a new event if no match is found.
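To illustrate the matching step, here is a minimal sketch of cosine-similarity event assignment. It is not the Event Generator's actual code: it assumes simple bag-of-words vectors, a hypothetical similarity threshold of 0.8, and that a new ad is compared against every ad already in each event. The names `vectorize`, `cosine_similarity`, `assign_event` and `SIMILARITY_THRESHOLD` are illustrative only.

```python
import math
import re
from collections import Counter

# Hypothetical cut-off; the real Event Generator's threshold is not stated here.
SIMILARITY_THRESHOLD = 0.8


def vectorize(text: str) -> Counter:
    """Assumed representation: lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def assign_event(transcript: str, events: dict, threshold: float = SIMILARITY_THRESHOLD) -> int:
    """Return an event_id for a final-draft transcript.

    `events` maps event_id -> list of term vectors for ads already in that event.
    The ad joins the event holding its most similar ad if that similarity meets
    the threshold; otherwise a new event is created (hypothetical integer ids).
    """
    vec = vectorize(transcript)
    best_id, best_score = None, 0.0
    for event_id, vectors in events.items():
        score = max((cosine_similarity(vec, v) for v in vectors), default=0.0)
        if score > best_score:
            best_id, best_score = event_id, score
    if best_id is not None and best_score >= threshold:
        events[best_id].append(vec)
        return best_id
    new_id = max(events, default=0) + 1
    events[new_id] = [vec]
    return new_id


# Usage: a reprint with small wording differences joins the first event,
# while an unrelated ad starts a new one.
events = {}
e1 = assign_event("Ran away from the subscriber a man named Tom about 25 years old", events)
e2 = assign_event("Ran away from the subscriber, a man named Tom, about 25 years of age.", events)
e3 = assign_event("Committed to the jail of this county a woman who calls herself Mary", events)
print(e1, e2, e3)
```

Comparing against every ad in an event (rather than an event centroid) lets a reprint match its closest earlier copy, at the cost of more comparisons as events grow.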

Ideally, I would like to use AI for feature extraction from the ads, but for any feature or ad where the AI fails to deliver what we want, we will use MTurk. I’ve attached the ad cleanup we have done so far. The ad_ids are the same as yours, so you can join on them with your database if you want to pull further details; for this query I kept the columns simple.

There are 27,420 ads. Where your transcription is available, it is populated in ‘fotm_transcript’; the remaining ~15k ads will all be run through the process described above. ‘transcript_vision’ contains the Google Vision API first draft: some are pretty good, others need more work. ‘lang’ is the language detected by Google; we are focusing on the English ads for now and will move on to the French ads next. ‘transcript_turk’ contains the final draft after MTurk revision. There are about 1k of these so far; another 1k are done, but their MTurk batches have not completed yet, so as soon as those finish I’ll download them and send an update.

About 1k ads will need new images because the current ones are too small. You can identify these as the ads with missing data in the fotm_transcript, transcript_vision and transcript_turk fields, or by multiplying img_px_w by img_px_h and filtering for values below 20,000. Event_id is our unique identifier, generated based on ad similarity.
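If it helps, here is a minimal sketch of flagging the ads that need new images, applying both criteria above and treating an ad as flagged if either one holds. It assumes each row is a dict keyed by the column names in the export, and that the height column is called `img_px_h` (inferred from "img_px_w and h"); the helper names are hypothetical.

```python
# Size cut-off from the email: width * height below 20,000 pixels.
MIN_PIXEL_AREA = 20_000

TRANSCRIPT_COLUMNS = ("fotm_transcript", "transcript_vision", "transcript_turk")


def is_blank(value) -> bool:
    """Treat None, empty strings and whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def needs_new_image(row: dict) -> bool:
    """True if no transcript exists or the image area is below the cut-off.

    Missing dimensions count as 0, so such rows are also flagged.
    """
    no_transcripts = all(is_blank(row.get(col)) for col in TRANSCRIPT_COLUMNS)
    width = row.get("img_px_w") or 0
    height = row.get("img_px_h") or 0  # assumed column name
    too_small = width * height < MIN_PIXEL_AREA
    return no_transcripts or too_small


# Usage with a few made-up rows.
rows = [
    {"ad_id": 1, "fotm_transcript": "Ran away...", "transcript_vision": None,
     "transcript_turk": None, "img_px_w": 400, "img_px_h": 300},
    {"ad_id": 2, "fotm_transcript": None, "transcript_vision": "",
     "transcript_turk": None, "img_px_w": 120, "img_px_h": 150},
    {"ad_id": 3, "fotm_transcript": None, "transcript_vision": "Ran away...",
     "transcript_turk": None, "img_px_w": 500, "img_px_h": 200},
]
flagged = [row["ad_id"] for row in rows if needs_new_image(row)]
print(flagged)
```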

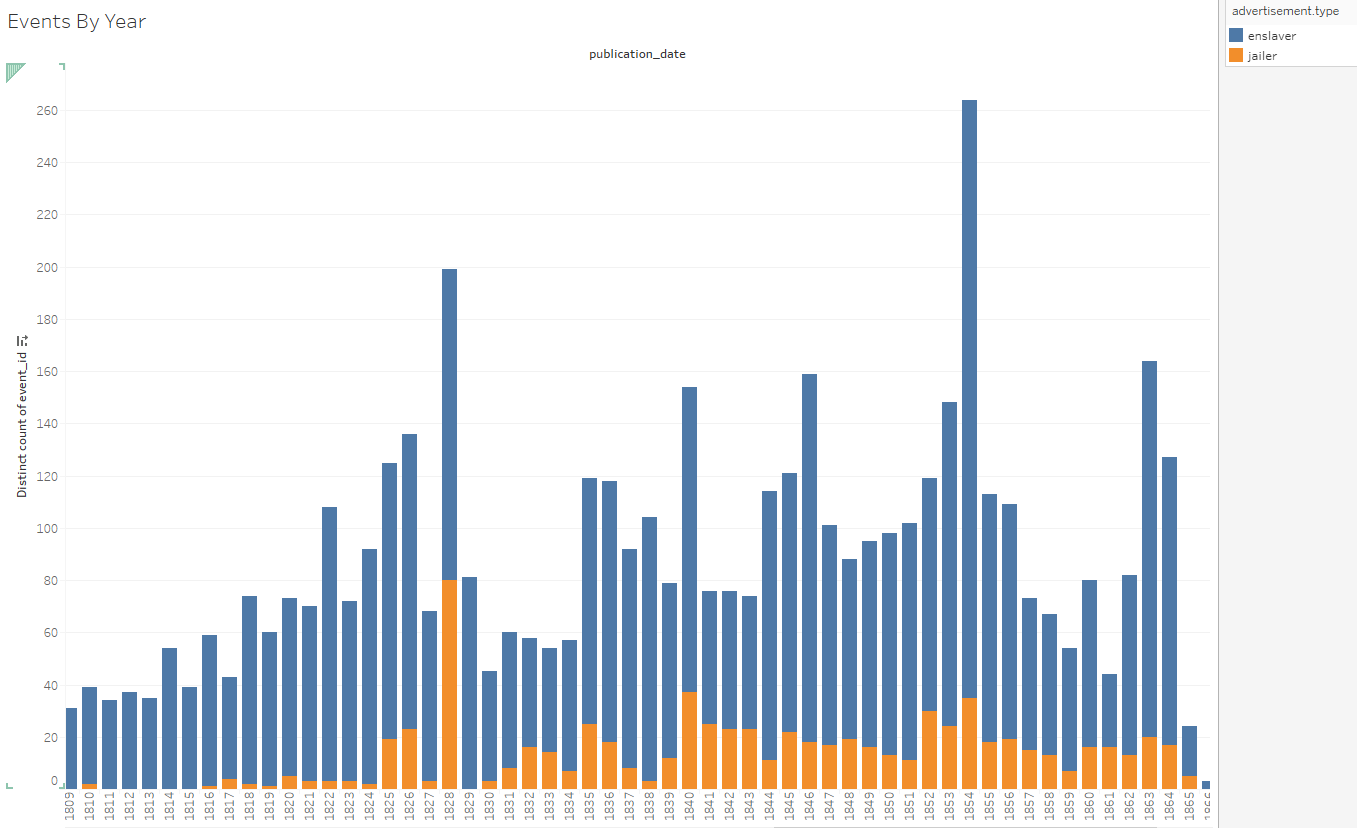

Here are some of the charts discussed in the call:

Anomalous number of events in 1828 and 1854

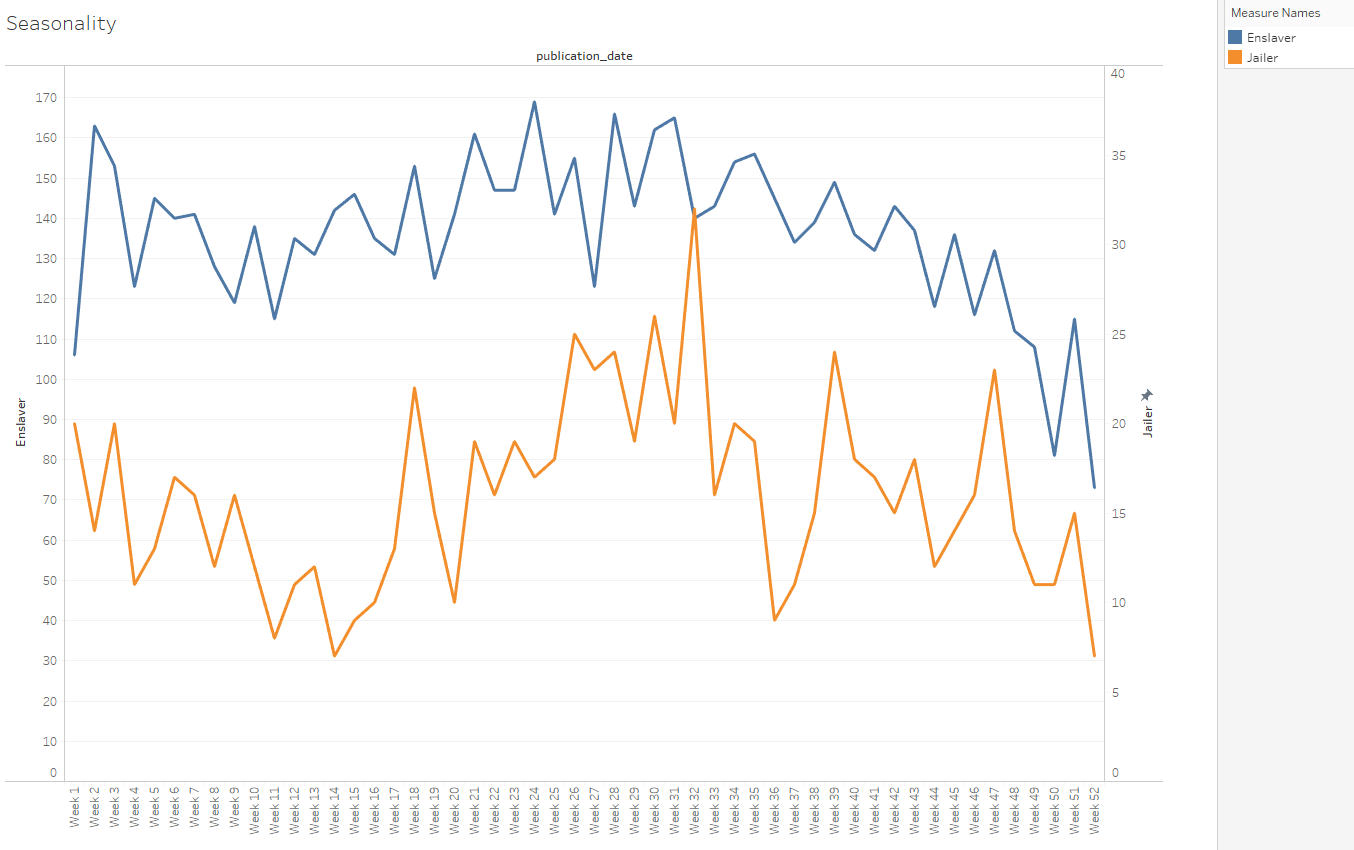

Runaway event seasonality by min publish date.

A larger sample might, of course, change some of these observations.
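For reference, a seasonality count like this one can be sketched as follows. This is a minimal illustration, not our charting code: it assumes "min publish date" means the earliest publish date among an event's ads, uses ISO week numbers for weekly granularity, and takes made-up (event_id, publish date) pairs as input.

```python
from collections import Counter
from datetime import date


def event_start_dates(ads):
    """Map each event_id to the earliest publish date among its ads."""
    starts = {}
    for event_id, published in ads:
        if event_id not in starts or published < starts[event_id]:
            starts[event_id] = published
    return starts


def seasonality(ads, granularity="week"):
    """Count events by the ISO week or the month of their min publish date."""
    counts = Counter()
    for start in event_start_dates(ads).values():
        if granularity == "week":
            key = start.isocalendar()[1]  # ISO week number, 1-53
        elif granularity == "month":
            key = start.month
        else:
            raise ValueError(f"unsupported granularity: {granularity}")
        counts[key] += 1
    return counts


# Usage with made-up data: event 1 appears twice, so only its earlier date counts.
ads = [
    (1, date(1828, 3, 4)),
    (1, date(1828, 3, 18)),
    (2, date(1854, 3, 30)),
    (3, date(1854, 7, 12)),
]
monthly = seasonality(ads, "month")
weekly = seasonality(ads, "week")
print(monthly)
```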

I’m looking forward to continuing work on this project. I’ll keep you in the loop as I make progress. Please reach out at any time if you have questions, and don’t hesitate to give me your candid feedback.

Here is a seasonality chart with monthly rather than weekly granularity; it does a better job of showing the lag between Jailer and Enslaver ads: